Catalog Size Inspection#

In this notebook, we look at methods to explore the size of the parquet files in a hipscat’ed catalog.

This can be useful to determine if your partitioning will lead to imbalanced datasets.

Author: Melissa DeLucchi (delucchi@andrew.cmu.edu)

Fetch file sizes#

First, we fetch the size on disk of all the parquet files in our catalog. This stage may take some time, depending on how many partitions are in your catalog, and the load characteristics of your machine.

[1]:

import os

import hipscat

### Change this path!!!
catalog_dir = "../../tests/data/small_sky_order1"

### ----------------
### You probably won't have to change anything from here.

catalog = hipscat.read_from_hipscat(catalog_dir)
info_frame = catalog.partition_info.as_dataframe()

# Record the on-disk size (in bytes) of each partition's parquet file.
for index, partition in info_frame.iterrows():
    file_name = hipscat.io.paths.pixel_catalog_file(
        catalog_dir, partition["Norder"], partition["Npix"]
    )
    info_frame.loc[index, "size_on_disk"] = os.path.getsize(file_name)

info_frame = info_frame.astype(int)

# Convert bytes to GB (here 1 GB = 1024**3 bytes).
info_frame["gbs"] = info_frame["size_on_disk"] / (1024 * 1024 * 1024)
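As a cross-check on the sizes gathered above, the same information can be collected by walking the catalog directory directly. The following is a minimal sketch using only the standard library; `parquet_sizes` is a hypothetical helper (not part of hipscat), and the `Norder=1/Dir=0/Npix=...` layout in the demo is only an illustrative stand-in for a real catalog directory.

```python
import tempfile
from pathlib import Path


def parquet_sizes(catalog_dir):
    """Map each *.parquet file under catalog_dir to its size in bytes.

    Hypothetical helper: it picks up every file with a .parquet suffix,
    so treat it as a sanity check rather than a replacement for the
    partition_info-driven loop.
    """
    root = Path(catalog_dir)
    return {
        str(path.relative_to(root)): path.stat().st_size
        for path in sorted(root.rglob("*.parquet"))
    }


# Demo on a throwaway directory with two fake "partition" files.
with tempfile.TemporaryDirectory() as tmp:
    for npix, n_bytes in [(44, 1000), (45, 1500)]:
        part = Path(tmp) / "Norder=1" / "Dir=0" / f"Npix={npix}.parquet"
        part.parent.mkdir(parents=True, exist_ok=True)
        part.write_bytes(b"\0" * n_bytes)
    demo_sizes = parquet_sizes(tmp)

for name, size in demo_sizes.items():
    print(f"{name}: {size} bytes")
```

If the number of files found this way differs from the number of rows in `info_frame`, that may point to stray or missing partition files.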

Summarize pixels and sizes#

healpix orders: distinct healpix orders represented in the partitions

num partitions: total number of partition files

Size on disk data: using the file sizes fetched above, check the balance of your data. If your rows are fixed-width (e.g. no nested arrays, and few NaNs), the size ratio here should be similar to the ratio of row counts between your largest and smallest partitions. If the two are very different, and you experience problems when parallelizing operations on your data, you may consider restructuring the data representation.

min size_on_disk: smallest file size (in GB)

max size_on_disk: largest file size (in GB)

size_on_disk ratio: max/min

total size_on_disk: sum of all parquet catalog files (actual catalog size may vary due to other metadata files)

[2]:

print(f'healpix orders: {info_frame["Norder"].unique()}')

print(f'num partitions: {len(info_frame["Npix"])}')

print("------")

print(f'min size_on_disk: {info_frame["gbs"].min():.2f}')

print(f'max size_on_disk: {info_frame["gbs"].max():.2f}')

print(f'size_on_disk ratio: {info_frame["gbs"].max()/info_frame["gbs"].min():.2f}')

print(f'total size_on_disk: {info_frame["gbs"].sum():.2f}')

healpix orders: [1]

num partitions: 4

------

min size_on_disk: 0.00

max size_on_disk: 0.00

size_on_disk ratio: 1.07

total size_on_disk: 0.00
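For a small test catalog, sizes printed in GB with two decimals all round to 0.00, as in the output above, while the ratio is still informative. A possible alternative, sketched here under the assumption that sizes are available in bytes, is to pick the unit per value. `format_size` and `summarize` are hypothetical helpers, and the byte counts are made up for illustration.

```python
def format_size(num_bytes):
    """Render a byte count using the largest binary unit that keeps it >= 1."""
    size = float(num_bytes)
    for unit in ("B", "KiB", "MiB", "GiB"):
        if size < 1024:
            return f"{size:.2f} {unit}"
        size /= 1024
    return f"{size:.2f} TiB"


def summarize(sizes_in_bytes):
    """Return min, max, max/min ratio, and total for a list of file sizes."""
    smallest, largest = min(sizes_in_bytes), max(sizes_in_bytes)
    return {
        "min": format_size(smallest),
        "max": format_size(largest),
        # Guard against empty files to avoid dividing by zero.
        "ratio": largest / smallest if smallest else float("inf"),
        "total": format_size(sum(sizes_in_bytes)),
    }


# Made-up sizes (in bytes) for four small partitions.
example_sizes = [48_000, 49_500, 50_200, 51_400]
summary = summarize(example_sizes)

print(f'min size_on_disk: {summary["min"]}')
print(f'max size_on_disk: {summary["max"]}')
print(f'size_on_disk ratio: {summary["ratio"]:.2f}')
print(f'total size_on_disk: {summary["total"]}')
```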

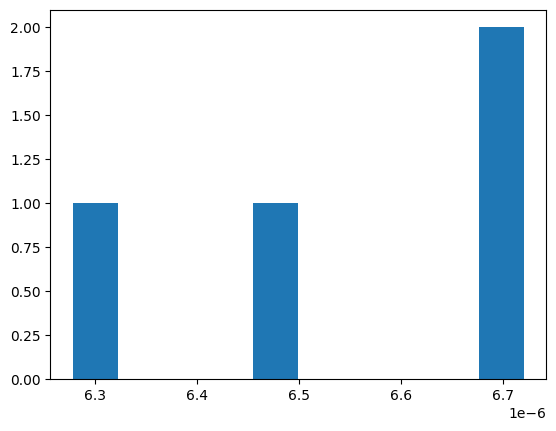

File size distribution#

Below we look at histograms of file sizes.

In our initial testing, we find that there’s a “sweet spot” file size of 100MB-1GB. Smaller files create more overhead for individual reads. Much larger files may slow down cross-matching between catalogs, and can create out-of-memory issues for dask when loading from disk.

The majority of your files should be in the “sweet spot”, and no files should be in the “too-big” category.

[3]:

import matplotlib.pyplot as plt
import numpy as np

plt.hist(info_frame["gbs"])

# Bin edges are in GB; each label names the bin between two consecutive edges.
bins = [0, 0.5, 1, 2, 100]
labels = ["small-ish", "sweet-spot", "big-ish", "too-big"]

hist = np.histogram(info_frame["gbs"], bins=bins)[0]
pcts = hist / len(info_frame)

for i in range(len(labels)):
    print(f"{labels[i]} \t: {hist[i]} \t({pcts[i]*100:.1f} %)")

small-ish : 4 (100.0 %)

sweet-spot : 0 (0.0 %)

big-ish : 0 (0.0 %)

too-big : 0 (0.0 %)
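The histogram counts how many files fall in each category, but not which ones. When some files land outside the sweet spot, it can help to list the offending partitions. The sketch below reuses the same bin edges and labels as the cell above, with a hypothetical `size_category` helper and made-up partition sizes. Unlike `np.histogram`, it assigns sizes beyond the last edge to "too-big" rather than dropping them.

```python
from bisect import bisect_right

GIB = 1024 ** 3
EDGES_GB = [0, 0.5, 1, 2, 100]  # same bin edges as the histogram cell
LABELS = ["small-ish", "sweet-spot", "big-ish", "too-big"]


def size_category(size_gb):
    """Return the label of the bin [edge_i, edge_i+1) containing size_gb."""
    index = bisect_right(EDGES_GB, size_gb) - 1
    # Clamp so that out-of-range sizes fall into the first or last bin.
    index = min(max(index, 0), len(LABELS) - 1)
    return LABELS[index]


# Made-up (Norder, Npix, size in bytes) triples for illustration.
partitions = [
    (1, 44, 0.2 * GIB),
    (1, 45, 0.7 * GIB),
    (1, 46, 1.5 * GIB),
    (1, 47, 3.0 * GIB),
]

# Keep only the partitions that fall outside the sweet spot.
flagged = [
    (norder, npix, size_category(size_bytes / GIB))
    for norder, npix, size_bytes in partitions
    if size_category(size_bytes / GIB) != "sweet-spot"
]

for norder, npix, category in flagged:
    print(f"Norder={norder} Npix={npix}: {category}")
```

With a real catalog, the triples could be taken from the `Norder`, `Npix`, and `size_on_disk` columns of `info_frame`.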